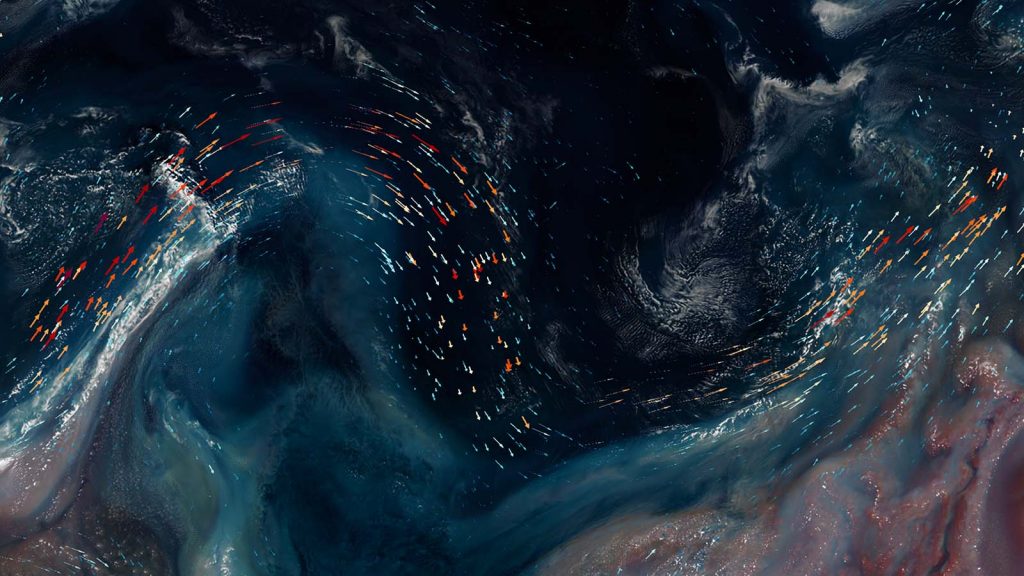

Over 100 scientists to share Argonne’s work in exascale, computing software, artificial intelligence methods and more.

Researchers from the U.S. Department of Energy’s (DOE) Argonne National Laboratory will highlight their work in using powerful supercomputers to tackle challenges in science and technology at SC24, the International Conference for High Performance Computing, Networking, Storage and Analysis, taking place in Atlanta Nov. 17-22.

Argonne’s Aurora exascale system will be spotlighted in a featured talk by Argonne Director of Data Science and Learning Ian Foster at DOE’s exhibit booth. Foster will discuss the AuroraGPT project to train large language models (LLMs) on scientific data. Argonne researchers also will compare the performance portability of a dozen applications on supercomputers that utilize GPUs, including Aurora at the Argonne Leadership Computing Facility (ALCF). The ALCF is a DOE Office of Science user facility.

Other notable activities are highlighted below. For the full schedule of conference involvement, visit Argonne’s SC24 website.

Gordon Bell Prize finalist

A multi-institutional team led by Argonne has been named a finalist for the Association for Computing Machinery’s Gordon Bell Prize for a breakthrough in computational protein design. Researchers developed MProt-DPO, a system that streamlines the design of proteins by focusing on desired traits such as stability and functionality. The innovation lies in its ability to run on exascale supercomputers, which can perform more than a quintillion calculations per second. This approach accelerates drug discovery and bioengineering by handling vast, complex datasets in record time.

Generative AI models for science

Building and training LLMs for scientific discovery poses significant technical challenges that are often beyond the resources of most organizations. Multi-institutional collaborations are essential to ensuring progress. Led by Argonne, the international Trillion Parameter Consortium has organized a half-day workshop to accelerate the development and use of generative AI for science and engineering. Speakers include Argonne’s Rick Stevens, Valerie Taylor, Charlie Catlett, Franck Cappello and Sandeep Madireddy, along with colleagues from Canada, Japan and Spain.

In another session, researchers Hongwei Jin and Krishnan Raghavan will explore how LLMs can automatically identify unusual activity in computing processes, making systems more reliable and secure. Anshu Dubey will also co-lead an interactive Birds of a Feather session on applying foundational LLM technologies to different high performance computing (HPC) targets.

Integrated Research Infrastructure demonstrations

Technical demonstrations in the DOE exhibit booth showcase how Argonne is melding tools, infrastructure and user facilities to advance discovery as part of DOE’s Integrated Research Infrastructure. A live demonstration will use the ALCF’s Polaris supercomputer to process data (both online and offline) from Advanced Photon Source experiments in near real time, with Globus Compute creating end-to-end data workflows that connect instruments with computing resources. Another session showcases the Fusion Pathfinder Project, which uses the ALCF and the National Energy Research Scientific Computing Center at DOE’s Lawrence Berkeley National Laboratory to analyze data from a fusion experiment at the DIII-D National Fusion Facility.

Exploiting emerging AI accelerators

The past few years have seen the emergence of specialized accelerators designed to speed up AI applications for scientific discovery. Argonne will participate in two activities highlighting the novel AI accelerators at the ALCF AI Testbed. In a full-day tutorial, Murali Emani, Varuni Sastry and Sid Raskar will present an overview of Argonne’s five state-of-the-art AI-accelerator systems. Emani and Raskar will also join colleagues from the United States, Scotland, Germany and Saudi Arabia in a session exploring trends in AI accelerators, the software support and training available for them, and the challenges of running AI applications on them. The aim throughout is to help build an AI-ready science research community.

Software demonstrations

Argonne researchers have several technical demonstrations scheduled in the DOE exhibit booth. One presentation will introduce the Partnering for Scientific Software Ecosystem Stewardship Opportunities project, which supports the stewardship and advancement of the scientific software ecosystem. The SciStream demo will show data streaming between scientific instruments and HPC nodes. Another demo features the Simulator of Quantum Network Communication, a customizable, discrete-event simulator that models quantum hardware and network protocols. And a team will showcase the Extreme-scale Scientific Software Stack.

Innovations in data compression

When it comes to working with big data, a key challenge is managing and storing massive amounts of information. At a full-day tutorial, Argonne’s Franck Cappello, Sheng Di and Robert Underwood will discuss ways to improve data compression, a method that reduces the size of data without losing important details. They also will present a technical paper on the use of lossy compressors on GPUs, as well as a paper with intern Yafan Huang of the University of Iowa that has been nominated for the conference’s prestigious Best Student Paper Award.
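To make the idea of lossy compression that "reduces the size of data without losing important details" more concrete, here is a minimal Python sketch of error-bounded lossy compression. It is an illustration under stated assumptions, not the method presented in the tutorial or papers. It assumes a user-chosen absolute error bound, predicts each value from the previously reconstructed one, quantizes the prediction error into bins twice the width of the bound, and hands the integer codes to zlib for lossless packing. The function names `compress` and `decompress` are hypothetical.

```python
import math
import struct
import zlib


def compress(values, error_bound):
    """Hypothetical error-bounded lossy compressor (illustrative sketch only)."""
    step = 2.0 * error_bound          # bin width; rounding to the nearest bin keeps error <= error_bound
    prediction = 0.0                  # assumed predictor: the previous reconstructed value
    codes = []
    for v in values:
        code = round((v - prediction) / step)   # quantize the prediction error to an integer bin index
        codes.append(code)
        prediction = prediction + code * step   # track exactly what the decoder will rebuild
    raw = struct.pack(f"<{len(codes)}q", *codes)  # store codes as 64-bit signed integers
    return zlib.compress(raw, 9)                  # lossless stage: small, repetitive codes shrink well


def decompress(blob, count, error_bound):
    """Rebuild `count` values from the output of `compress`."""
    step = 2.0 * error_bound
    codes = struct.unpack(f"<{count}q", zlib.decompress(blob))
    out = []
    prediction = 0.0
    for code in codes:
        prediction = prediction + code * step   # same arithmetic as the compressor
        out.append(prediction)
    return out


if __name__ == "__main__":
    data = [math.sin(i / 50.0) for i in range(10_000)]
    eb = 1e-3
    blob = compress(data, eb)
    restored = decompress(blob, len(data), eb)
    max_err = max(abs(a - b) for a, b in zip(data, restored))
    print(f"raw float64 bytes: {len(data) * 8}, compressed bytes: {len(blob)}")
    print(f"max abs error: {max_err:.2e} (bound {eb})")
```

Because the compressor mirrors the decoder's reconstruction, every restored value stays within the chosen bound (up to floating-point rounding), while smooth data produces small, repetitive codes that the lossless stage packs tightly. Production compressors use far more sophisticated predictors and encoders than this sketch.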

Experiment-in-the-Loop Computing

The annual Extreme-Scale Experiment-in-the-Loop Computing (XLOOP) workshop focuses on the intersection of HPC and large-scale experimental science. Chaired by Argonne’s Justin Wozniak and Nicholas Schwarz, XLOOP will feature papers and presentations detailing how advances in computing, including new AI data transfer methods, are helping to accelerate discoveries by integrating HPC resources with observational and experimental facilities.

Building a more inclusive community

Beyond the technical innovations, Argonne researchers are also focused on building a diverse and inclusive HPC community. Lois Curfman McInnes and other scientists will participate in discussions about empowering women in the field, sharing their work and inspiring the next generation of leaders in supercomputing. In a session titled “Scientific Software and the People Who Make it Happen,” Anshu Dubey and colleagues will address the question “As teams behind the software become larger and more geographically diverse, how can we build more effective communities of practice?”

Reproducibility in HPC

Another issue facing the HPC community is the reproducibility of digital artifacts. The requirements for specialized hardware and scale often make experimental research products difficult to access and reproduce. To address this, SC established a Reproducibility Initiative requiring authors of submitted papers to describe their significant research products; the Chameleon cloud is the default infrastructure for artifact sharing under this initiative. Kate Keahey, an Argonne senior scientist and Chameleon principal investigator, will lead a Birds of a Feather session on practical reproducibility: sharing reproducible digital artifacts under the constraints of real-world applications. Researchers and practitioners will discuss lessons learned from their own reproducibility initiatives and will explore steps to improve artifact sharing and usage. In addition, in a workshop co-located with SC24, Keahey will give a keynote address on how Chameleon’s bare-metal reconfigurability provides an excellent environment for reproducible science.